Order:

OrderId int pk,

CustomerName varchar(100)

OrderLines:

OrderId int not null fk_refers_to_Order

ProductName varchar(100).

Here's a sample set of data:

Order Table:

| OrderId | CustomerName |

| 1 | Tada |

| 2 | Wada |

Order Details Table:

| OrderId | ProductName |

| 1 | Prod1 |

| 1 | Prod2 |

| 2 | Prod1 |

| 2 | Prod3 |

The generated report should have output in this format:

| OrderId | Product 1 | Product 2 |

| 1 | Prod1 | Prod2 |

| 2 | Prod1 | Prod3 |

So how do you solve it, well here's what I did...loaded the data using for xml explicit so that the OrderLines data is rolled up and added to Order node as children. Wrote an Xsl to iterate through the nodes and print them in a csv format and loaded the csv in xls. Different story that I faced quite a few issues with this approach! Firstly, for xml explicity returns the resultset as a fragment (i.e. there is no root node), during ASP/VB days I could add a dummy root node quite easily in the xml template but I couldn't any easy way of achieving it in ADO.Net! The only approach that I found was on serverside.net which added the dummy root node in the for xml query itself. Once I got the XmlTextReader, I loaded it into a dataset with XmlReadMode.InferSchema and used dataset.WriteXml to write the entire xml to a file (I had to follow this approach just to get the entire xml from "for xml" query, the query analyzer always truncates the results if it exceeds certain characters). With the xml file in hand I wrote some quick n dirty (this operation was one time only and hence there was no need to automate the entire process) xsl in cooktop which would save the generate a csv output.

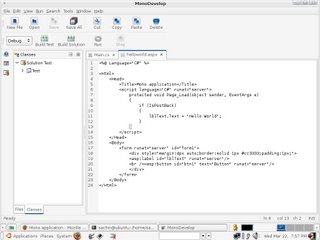

The relevant snippets of code and xsl are below:

XmlTextReader r = (XmlTextReader)cmd.ExecuteXmlReader();

myDataSet1.ReadXml(r,XmlReadMode.InferSchema);

myDataSet1.WriteXml("data1.xml");

Update: Had to remove the xsl listing as it was randomly blewing up my feed! Will put it back on once I figure it out.